If Microsoft wants to safeguard “democratic freedoms,” why did it fund an Israeli facial recognition firm involved in secret military surveillance of Palestinians?

Microsoft committed to protecting democratic freedoms. Then it funded an Israeli facial recognition firm that secretly watched West Bank Palestinians.

Microsoft has invested in a startup that uses facial recognition to surveil Palestinians throughout the West Bank, in spite of the tech giant’s public pledge to avoid using the technology if it encroaches on democratic freedoms.

AnyVision, which is headquartered in Israel but has offices in the United States, the United Kingdom and Singapore, sells an “advanced tactical surveillance” software system, Better Tomorrow. It lets customers identify individuals and objects in any live camera feed, such as a security camera or a smartphone, and then track targets as they move between different feeds.

According to five sources familiar with the matter, AnyVision’s technology powers a secret military surveillance project throughout the West Bank. One source said the project is nicknamed "Google Ayosh," where "Ayosh" refers to the occupied Palestinian territories and "Google" denotes the technology’s ability to search for people.

The American technology company Google is not involved in the project, a spokesman said.

The surveillance project was so successful that AnyVision won the country’s top defense prize in 2018. During the presentation, Israel’s defense minister lauded the company — without using its name — for preventing “hundreds of terror attacks” using “large amounts of data.”

Palestinians living in the West Bank do not have Israeli citizenship or voting rights but are subject to movement restrictions and surveillance by the Israeli government.

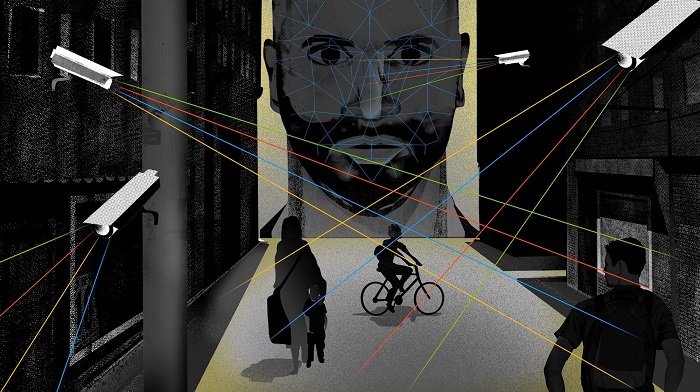

The Israeli army has installed thousands of cameras and other monitoring devices across the West Bank to monitor the movements of Palestinians and deter terror attacks. Security forces and intelligence agencies also scan social media posts and use algorithms in an effort to predict the likelihood that someone will carry out a lone-wolf attack and arrest them before they do.

The addition of facial recognition technology transforms passive camera surveillance, combined with a list of suspects, into a much more powerful tool.

“The basic premise of a free society is that you shouldn’t be subject to tracking by the government without suspicion of wrongdoing. You are presumed innocent until proven guilty,” Shankar Narayan, technology and liberty project director at the American Civil Liberties Union, said. “The widespread use of face surveillance flips the premise of freedom on its head and you start becoming a society where everyone is tracked no matter what they do all the time.”

“Face recognition is possibly the most perfect tool for complete government control in public spaces, so we need to treat it with extreme caution. It’s hard to see how using it on a captive population [like Palestinians in the West Bank] could comply with Microsoft’s ethical principles,” he added.

When NBC News first approached AnyVision for an interview, CEO Eylon Etshtein denied any knowledge of "Google Ayosh," threatened to sue NBC News and said that AnyVision was the “most ethical company known to man.” He disputed that the West Bank was “occupied” and questioned the motivation of the NBC News inquiry, suggesting the reporter must have been funded by a Palestinian activist group.

In subsequent written responses to NBC News’ questions and allegations, AnyVision apologized for the outburst and revised its position.

“As a private company we are not in a position to speak on behalf of any country, company or institution,” Etshtein said.

Days later, AnyVision gave a different response: “We are affirmatively denying that AnyVision is involved in any other project beyond what we have already stated [referring to the use of AnyVision’s software at West Bank border checkpoints].”

AnyVision’s technology has also been used by Israeli police to track suspects through the Israeli-controlled streets of East Jerusalem, where 3 of 5 residents are Palestinian.

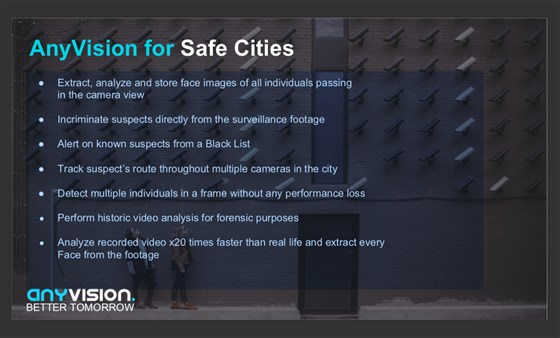

See how AnyVision’s facial recognition software tracks people through cities

One of the company’s technology demonstrations, a video obtained by NBC News, shows what purports to be live camera feeds monitoring people, including children and women wearing hijabs and abayas, as they walk through Jerusalem.

AnyVision said this did not reflect an “ongoing customer relationship,” referring to the Israeli police.

When AnyVision won the prestigious Israel Defense Prize, awarded to entities found to have “significantly improved the security of the state,” the company wasn’t named in the media announcement because the surveillance project was classified. Employees were instructed not to talk about the award publicly.

However, NBC News has seen a photo of the team accepting the prize, a framed certificate that commends AnyVision for its “technological superiority and direct contribution to the prevention of terror attacks.”

A slide taken from a leaked AnyVision sales presentation, explaining how its face recognition technology can track individuals across a city. (AnyVision)

AnyVision said it does not comment on behalf of “other companies, countries or institutions.”

“Many countries and organizations face a diverse set of threats, whether it is keeping students and teachers safe in schools, facilitating the movement of individuals in and out of everyday buildings, and other situations where innocents could face risk,” the company said in a statement. “Our fundamental mission is to help keep all people safe with a best-in-class technology offering, wherever that threat may originate.”

The Israel Defense Forces (IDF) declined to comment on "Google Ayosh" or AnyVision’s receipt of the Israel Defense Prize.

Microsoft's investment

NBC’s investigation, which builds on reporting from Israeli business publication TheMarker, comes at a time when Microsoft is positioning itself as a moral leader among technology companies, a move that has shielded the company from sustained public criticism faced by others such as Facebook and Google. The company’s investment in AnyVision raises questions about how it applies its ethical principles in practice.

“Microsoft takes these mass surveillance allegations seriously because they would violate our facial recognition principles,” a Microsoft spokesman said.

“If we discover any violation of our principles, we will end our relationship.”

“All of our installations have been examined and confirmed against not only Microsoft’s ethical principles, but also our own internal rigorous approval process,” AnyVision said.

In June, Microsoft’s venture capital arm M12 announced it would invest in AnyVision as part of a $74 million Series A funding round, along with Silicon Valley venture capital firm DFJ. The deal sparked criticism from human rights activists who argued — as Forbes reported — that the investment was incompatible with Microsoft’s public statements about ethical standards for facial recognition technology.

While there are benign applications for facial recognition, such as unlocking your smartphone, the technology is controversial because it can be used to facilitate mass surveillance, exacerbate human bias in policing and infringe on people’s civil liberties. Because of this, several U.S. cities, including San Francisco, Oakland and Berkeley in California and Somerville, Massachusetts, have banned the use of the software by the police and other agencies.

In a December 2018 blog post, Microsoft President Brad Smith said, “We need to be clear-eyed about the risks and potential for abuse” and called for government regulation of facial-recognition technology. He noted that it can “lead to new intrusions into people’s privacy” and that when used by a government for mass surveillance can “encroach on democratic freedoms.”

Microsoft's president Brad Smith has called for government regulation of facial-recognition technology and noted in a December 2018 blog post that it can "lead to new intrusions on people's privacy" and "encroach on democratic freedoms." (Chona Kasinger / Bloomberg via Getty Images)

Microsoft also unveiled six ethical principles to guide its facial recognition work: fairness, transparency, accountability, nondiscrimination, notice and consent and lawful surveillance. The last principle states: “We will advocate for safeguards for people’s democratic freedoms in law enforcement surveillance scenarios and will not deploy facial recognition technology in scenarios that we believe will put these freedoms at risk.”

Microsoft said that AnyVision agreed to comply with these principles as part of M12’s investment and secured audit rights to ensure compliance.

"We are proceeding with a third-party audit and asked for a robust board level review and compliance process. AnyVision has agreed to both," a Microsoft spokesman said.

Microsoft declined to explain how, exactly, it defined these principles or how it verified AnyVision’s compliance prior to investing.

“They seem to believe they can have their cake and eat it, that ethical principles just exist in the abstract and don’t have to engage with real-world politics. But their technologies do, which means that they do,” said Os Keyes, from the University of Washington, who researches the ethics of facial recognition.

'Our mission is to help keep people safe'

Several former AnyVision employees, who did not want to be named because they had signed nondisclosure agreements and feared retaliation, told NBC News that the company did not adhere to Microsoft’s ethical standards.

“Ultimately, I saw no evidence that ethical considerations drove any business decisions,” one former employee said.

They also described a cut-throat culture, where the pressure to sell technology to corporate, government and military clients overrode moral questions around the application of the technology.

All of the former employees NBC News spoke to said they left because of broken promises over bonuses and other compensation, as well as ethical questions over how the technology was being marketed and used in practice.

“There’s a certain amount of ‘fake it until you make it’ with startups but let’s just say their definition of the truth is quite a bit more flexible than mine,” one said.

Another suggested that AnyVision may have made similar misrepresentations to investors like Microsoft.

AnyVision told NBC News that it reviewed all of its customers and use cases for compliance with Microsoft’s ethical standards and found nothing in violation. It did not provide any specific details about the compliance process.

“While we are working very hard to meet and beat our commercial KPIs (key performance indicators), it is never at the expense of ethical considerations,” AnyVision said.

AnyVision said that staffing changes were a difficult but expected part of being a “rapidly growing startup.”

“Fundamentally our mission is to help keep people safe, improve daily life and do so in an ethical manner,” Etshtein said.

AnyVision's military ties

AnyVision, which launched in 2015 with what it claimed to be a “world-leading face recognition algorithm,” has close ties to Israel’s military and intelligence services. It counts former head of Mossad Tamir Pardo among its board of advisers. Amir Kain, who was director of the Defense Ministry’s security department from 2007 to 2015, is AnyVision’s president. Several current employees did their national military service at elite cyberspy agency Unit 8200, equivalent to the NSA or the United Kingdom's GCHQ.

The company’s core product is designed to pick out the faces of multiple suspects in a large crowd, monitor crowd density and track and categorize different types of vehicles, according to promotional materials. AnyVision has described this system as “nonvoluntary” because individuals do not need to enroll to be detected automatically. The company claims to have deployed its technology across more than 115,000 cameras.

AnyVision has publicly acknowledged one of its projects in the West Bank — the provision of facial recognition technology at 27 of the checkpoints Palestinians must use to cross into Israel. Migrant workers place their ID cards on a sensor and stare into a camera that uses face recognition to verify their identity. AnyVision said in an August blog post that the technology “drastically decreases wait times at border crossings” and provides an “unbiased safeguard at the border to detect and deter persons who have committed unlawful activities.” This screening is not used at the separate West Bank checkpoints Israelis drive through.

In 2007, based on conversations with former Israeli officials, Harvard University researcher Yael Berda estimated that the Israeli government had a list of about 200,000 potential terrorists in the West Bank that it wanted to monitor — about a fifth of the West Bank’s male population at the time — as well as a list of 65,000 individuals deemed to pose a criminal risk. Both lists included people suspected of specific offenses, as well as people considered worthy of scrutiny for other reasons — for example, criticizing Israel on Facebook or living in a village where Hamas is popular.

“It’s not limited to people suspected of actual militancy or training or anything,” said Berda, who has written a book about the bureaucracy of Israel’s occupation.

The IDF declined to comment on how many people it has under surveillance in the West Bank currently.

“There has never been a surveillance technology that hasn’t disproportionately impacted already marginalized communities,” Narayan said. “And it’s still unclear whether a perfectly unbiased face surveillance system can coexist with democracy.”

AnyVision has a second product, called SesaMe, that offers identity verification for banking and smartphone applications.

Beyond its work with the Israeli government, the company has sold its facial recognition software to casinos, sports stadiums, retailers and theme parks across the U.S. AnyVision demonstrated, but said it didn’t sell, the technology to Customs and Border Protection in Arizona as a modular component for a surveillance truck. It also approached high schools after mass shootings, including one in Santa Fe, Texas, to offer facial recognition technology as a means to keep children safer.

The company’s technology was tested in Russia at Moscow’s Domodedovo Airport in 2018, but AnyVision said it had terminated all activity there. Nigerian security company Cyber Dome told NBC News that it sold AnyVision’s technology to local corporate customers. AnyVision denied this.

AnyVision’s executives considered the West Bank to be a testing ground for its surveillance technology, one former employee said.

“It was heavily communicated to us [by AnyVision’s leadership] that the Israeli government was the proof of concept for everything we were doing globally. The technology was field-tested in one of the world’s most demanding security environments and we were now rolling it out to the rest of the market,” he said.

“Israel was the first territory where we validated our technology,” Etshtein said. “However, today more than 95 percent of our revenue is generated from end customers outside of Israel.”

Human rights and civil liberties groups were concerned about what they saw as the use of Palestinians as guinea pigs for surveillance technology exports.

The ACLU’s Shankar Narayan described the practice as “very troubling but far from new.”

“One of the dirty little secrets of AI and face recognition is that in order to make it more accurate, there is this hunger for datasets. So many entities have engaged in what I would say were unethical practices with regards to getting more datasets,” he said.

Palestinian activists questioned the use of face recognition at the border checkpoints.

“Strengthening the checkpoints with face recognition means more sustainability for the occupation,” said Nadim Nashif, executive director and co-founder of 7amleh, a nonprofit that advocates for Palestinian human rights. “Palestinians want full rights like any other human being without being restricted in their freedom of movement.”

He added that it was sad that big American companies like Microsoft publicly talk about human rights compliance at a “declarative level” but then invest in companies like AnyVision “without thoroughly checking or restricting their operations.”

Source: nbcnews.com