It used to be that server logs were just boring utility files whose most dramatic moments came when someone forgot to write a script to wipe out the old ones and so they were left to accumulate until they filled the computer’s hard-drive and crashed the server.

Then, a series of weird accidents turned server logs into the signature motif of the 21st century, a kind of eternal, ubiquitous exhaust from our daily lives, the CO2 of the Internet: invisible, seemingly innocuous, but harmful enough, in aggregate, to destroy our world.

Here’s how that happened: first, there were cookies. People running web-servers wanted a way to interact with the people who were using them: a way, for example, to remember your preferences from visit to visit, or to identify you through several screens’ worth of interactions as you filled and cashed out a virtual shopping cart.

Then, Google and a few other companies came up with a business model. When Google started, no one could figure out how the company would ever repay its investors, especially as the upstart search-engine turned up its nose at the dirtiest practices of the industry, such as plastering its homepage with banner ads or, worst of all, selling the top results for common search terms.

Instead, Google and the other early ad-tech companies worked out that they could place ads on other people’s websites, and that those ads could act as a two-way conduit between web users and Google. Every page with a Google ad was able to both set and read a Google cookie with your browser (you could turn this off, but no one did), so that Google could get a pretty good picture of which websites you visited. That information, in turn, could be used to target you for ads, and the sites that placed Google ads on their pages would get a little money for each visitor. Advertisers could target different kinds of users – users who had searched for information about asbestos and lung cancer, about baby products, about wedding planning, about science fiction novels. The websites themselves became part of Google’s ‘‘inventory’’ where it could place the ads, but they also improved Google’s dossiers on web users and gave it a better story to sell to advertisers.

The idea caught the zeitgeist, and soon everyone was trying to figure out how to gather, aggregate, analyze, and resell data about us as we moved around the web.

Of course, there were privacy implications to all this. As early breaches and tentative litigation spread around the world, lawyers for Google and for the major publishers (and for publishing tools, the blogging tools that eventually became the ubiquitous ‘‘Content Management Systems’’ that have become the default way to publish material online) adopted boilerplate legalese, those ‘‘privacy policies’’ and ‘‘terms of service’’ and ‘‘end user license agreements’’ that are referenced at the bottom of so many of the pages you see every day, as in, ‘‘By using this website, you agree to abide by its terms of service.’’

As more and more companies twigged to the power of ‘‘surveillance capitalism,’’ these agreements proliferated, as did the need for them, because before long, everything was gathering data. As the Internet everted into the physical world and colonized our phones, we started to get a taste of what this would look like in the coming years. Apps that did innocuous things like turning your phone into a flashlight, or recording voice memos, or letting your kids join the dots on public domain clip-art, would come with ‘‘permissions’’ screens that required you to let them raid your phone for all the salient facts of your life: your phone number, e-mail address, SMSes and other messages, e-mail, location – everything that could be sensed or inferred about you by a device that you carried at all times and made privy to all your most sensitive moments.

When a backlash began, the app vendors and smartphone companies had a rebuttal ready: ‘‘You agreed to let us do this. We gave you notice of our privacy practices, and you consented.’’

This ‘‘notice and consent’’ model is absurd on its face, and yet it is surprisingly legally robust. As I write this in July of 2016, US federal appellate courts have just ruled on two cases that asked whether End User Licenses that no one read and no one understands and no one takes seriously are enforceable. The cases differed a little in their answer, but in both cases, the judges said that they were enforceable at least some of the time (and that violating them can be a felony!). These rulings come down as the entirety of America has been consumed with Pokémon Go fever, only to have a few killjoys like me point out that merely by installing the game, all those millions of players have ‘‘agreed’’ to forfeit their right to sue any of Pokémon’s corporate masters should the companies breach all that private player data. You do, however, have 30 days to opt out of this forfeiture; if Pokémon Go still exists in your timeline and you signed up for it in the past 30 days, send an e-mail to [address omitted] with the subject ‘‘Arbitration Opt-out Notice’’ and include in the body ‘‘a clear declaration that you are opting out of the arbitration clause in the Pokémon Go terms of service.’’

Notice and consent is an absurd legal fiction. Jonathan A. Obar and Anne Oeldorf-Hirsch, a pair of communications professors from York University and the University of Connecticut, published a working paper in 2016 called ‘‘The Biggest Lie on the Internet: Ignoring the Privacy Policies and Terms of Service Policies of Social Networking Services.’’ The paper details how the profs gave their students, who are studying license agreements and privacy, a chance to beta-test a new social network (this service was fictitious, but the students didn’t know that). To test the network, the students had to create accounts, and were given a chance to review the service’s terms of service and privacy policy, which prominently promised to give all the users’ personal data to the NSA, and demanded the students’ first-born children in return for access to the service. As you may have gathered from the paper’s title, none of the students noticed either fact, and almost none of them even glanced at the terms of service for more than a few seconds.

Indeed, you can’t examine the terms of service you interact with in any depth – it would take more than 24 hours a day just to figure out what rights you’ve given away that day. But as terrible as notice-and-consent is, at least it pretends that people should have some say in the destiny of the data that evanesces off of their lives as they move through time, space, and information.

The next generation of networked devices is literally incapable of participating in that fiction.

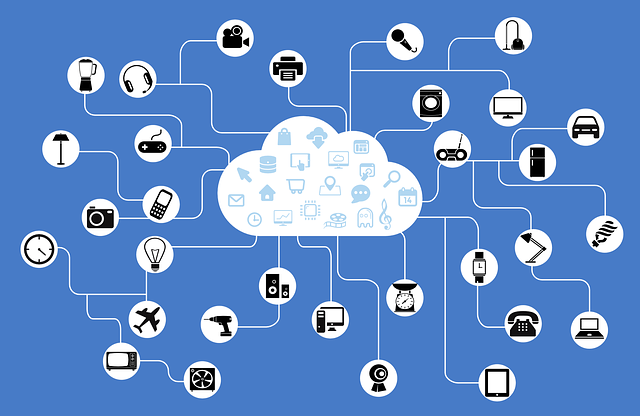

The coming Internet of Things – a terrible name that tells you that its proponents don’t yet know what it’s for, like ‘‘mobile phone’’ or ‘‘3D printer’’ – will put networking capability in everything: appliances, lightbulbs, TVs, cars, medical implants, shoes, and garments. Your lightbulb doesn’t need to be able to run apps or route packets, but the tiny, commodity controllers that allow smart lightswitches to control the lights anywhere (and thus allow devices like smart thermostats and phones to integrate with your lights and home security systems) will come with full-fledged computing capability by default, because that will be more cost-efficient than customizing a chip and system for every class of device. The thing that has driven computers so relentlessly, making them cheaper, more powerful, and more ubiquitous, is their flexibility, their character of general-purposeness. That fact of general-purposeness is inescapable and wonderful and terrible, and it means that the R&D that’s put into making computers faster for aviation benefits the computers in your phone and your heart-monitor (and vice-versa). So everything’s going to have a computer.

You will ‘‘interact’’ with hundreds, then thousands, then tens of thousands of computers every day. The vast majority of these interactions will be glancing, momentary, and with computers that have no way of displaying terms of service, much less presenting you with a button to click to give your ‘‘consent’’ to them. Every TV in the sportsbar where you go for a drink will have cameras and mics and will capture your image and process it through facial-recognition software and capture your speech and pass it back to a server for continuous speech recognition (to check whether you’re giving it a voice command). Every car that drives past you will have cameras that record your likeness and gait, that harvest the unique identifiers of your Bluetooth and other short-range radio devices, and send them to the cloud, where they’ll be merged and aggregated with other data from other sources.

In theory, if notice-and-consent were anything more than a polite fiction, none of this would happen. If notice-and-consent is necessary to make data-collection legal, then without notice-and-consent, the collection is illegal.

But that’s not the realpolitik of this stuff: the reality is that when every car has more sensors than a Google Streetview car, when every TV comes with a camera to let you control it with gestures, when every medical implant generates telemetry that is collected by a ‘‘services’’ business and sold to insurers and pharma companies, the argument will go, ‘‘All this stuff is both good and necessary – you can’t hold back progress!’’

It’s true that we can’t have self-driving cars that don’t look hard at their surroundings all the time, and pay especially close attention to humans to make sure that they’re not killing them. However, there’s nothing intrinsic to self-driving cars that says that the data they gather needs to be retained or further processed. Remember that for many years, the server logs that recorded all your interactions with the web were flushed as a matter of course, because no one could figure out what they were good for, apart from debugging problems when they occurred.

The returns from data-acquisition have been declining for years. In the early years of data-driven advertising, advertisers took it on faith that better targeting justified much higher ad-rates. Over time, some of that optimism has worn off, helped along by the fact that we have become adapted to advertising, so that targeting no longer works as well as it did in the early days. Recall that soap companies once advertised by proclaiming, ‘‘You will be cleaner, 5 cents,’’ and seem to have sold a hell of a lot of soap that way. Over time, people became inured to those messages, entering into an arms race with advertisers that takes us all the way up to those Axe Body Spray ads where the right personal hygiene products will summon literal angels to the side of an unremarkable man and, despite their wings, these angels all exude decidedly unangelic lust for our lad. The ads are always the most interesting part of old magazines, because they suggest a time when people were much more naive about the messages they believed.

But diminishing returns can be masked by more aggressive collection. If Facebook can’t figure out how to justify its ad ratecard based on the data it knows about you, it can just plot ways to find out a lot more about you and buoy up that price.

The next iteration of this is the gadgets that will spy on us from every angle, in every way, all the time. The data that these services collect will be even more toxic in its potential to harm us. Consider that today, identity thieves merge data from several breaches in order to piece together enough information to get a duplicate deed for their victims’ houses and sell those houses out from under them; that voyeurs use untargeted attacks to seize control over people’s laptops to capture nude photos of them and then use those to blackmail their victims to perform live sex-acts on camera; that every person who ever applied for security clearance in the USA had their data stolen by Chinese spies, who broke into the Office of Personnel Management’s servers and stole more than 20,000,000 records.

The best way to secure data is never to collect it in the first place. Data that is collected is likely to leak. Data that is collected and retained is certain to leak. A house that can be controlled by voice and gesture is a house with a camera and a microphone covering every inch of its floorplan.

The IoT will rupture notice-and-consent, but without some other legal framework to replace it, it’ll be a free-for-all that ends in catastrophe.

I’m frankly very scared of this outcome and have a hard time imagining many ways in which we can avert it, but I do have one scenario that’s plausible: class action lawsuits.

Right now, companies that breach their users’ data face virtually no liability. When Home Depot lost 53 million credit-card numbers and 56 million associated e-mail addresses, a court awarded its customers $0.34 each, along with gift certificates for credit monitoring services, whose efficacy is not borne out in the literature. But the breaches will keep on coming, and they will get worse, and entrepreneurial class-action lawyers will be spoiled for choice when it comes to clients. These no-win/no-fee lawyers represent a kind of sustained, hill-climbing iterative attack on surveillance capitalism, trying randomly varied approaches to get courts to force the corporations they sue to absorb the full social cost of their reckless data-collection and handling.

Eventually, some lawyer is going to convince a judge that, say, 1% of the victims of a deep-pocketed company’s breach will end up losing their houses to identity thieves as a result of the data that the company has leaked, and that the damages should be equal to 1% of all the property owned by the 53 million (or 500 million!) customers whom the company has wronged. It will take down a Fortune 100 company, and transfer billions from investors and insurers to lawyers and their clients.

When that day comes, there’ll be blood in the boardroom. Every major investor will want to know that the company is insured for a potential award of 500X the company’s net worth. Every re-insurer and underwriter will want to know exactly what data-collection practices they’re insuring. (Indeed, even a good scare will likely bring both circumstances to reality, even if the decision is successfully appealed.)

The danger, of course, is the terms of service. If every ‘‘agreement’’ you click past or flee from includes forced arbitration – that is, a surrender of your right to sue or join a class action – then there’s no class to join the class action. There’s a reason arbitration agreements have proliferated to every corner of our lives, from Airbnb and Google Fiber to several doctors and dentists whose waiting-rooms I’ve walked out of since moving back to the USA last year. I even had to agree to forced arbitration to drop my daughter off at a kids’ birthday party (I’m not making this up – it was in a pizza parlor with a jungle gym).

It’s a coming storm of the century, and our umbrellas are all those water-soluble $5 numbers that materialize on New York street corners every time clouds appear in the sky. Be afraid.

Source: locusmag.com